This article walks through the randomized controlled trial (RCT), a research study with two groups: one group uses a new program and the other continues with “business as usual.” In this “gold standard” design, the researcher decides at random who uses the new program when planning the study. Cowritten with Dr. Laura Janakiefski, this guide is designed for:

- EdTech solution designers and product owners deciding whether they’re ready for an RCT.

- Curriculum publishers preparing products for external research validation and ESSA evidence submissions.

- Educators and district leaders reading RCTs and wondering why a study may show “no impact” despite anecdotal success.

While RCTs are often considered the “gold standard” for educational evidence, their results are frequently hard to interpret, not necessarily because the intervention doesn’t work, but because it wasn’t implemented or assessed correctly. This checklist can help you avoid spending resources on a study that won’t meet your needs.

✅ RCT Readiness Self-Test

Ask yourself:

- Have we seen consistent results in real classrooms, outside of pilots?

- Do we have a clear implementation protocol (e.g., dosage, training model, timeline)?

- Do we have effect size estimates from previous studies, so we can choose a sample size with adequate statistical power?

- Have we identified the student populations and school contexts where our intervention performs best?

- Do we have trusted district partners who are ready and equipped to implement the study?

If you checked fewer than 4 of these, hit pause on that RCT. Preliminary research is your next step.

Essential Planning Before Launching an RCT

1. Have You Demonstrated Real-World Impact?

Correlational studies show whether your intervention works as expected when used by typical educators in typical settings. If you’ve only tested with your internal team or in ideal circumstances, your RCT may fail due to poor implementation—not necessarily program flaws.

2. Do You Know Your Implementation Protocol?

Quasi-experimental and retrospective studies can surface critical needs: training structures, support models, timelines, or even ideal class sizes. Without this prep, RCTs can fall apart in the field.

3. Have You Estimated a Realistic Effect Size?

Underpowered RCTs (due to inflated effect size assumptions) lead to expensive studies that “find nothing.” Use correlational or quasi-experimental data in real classroom settings to get realistic estimates.
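To see why inflated assumptions are so costly, here is a minimal Python sketch of a standard normal-approximation sample-size formula for comparing two group means. It is an illustration, not the method of any particular study: the function name `students_per_group`, the 5% significance level, and the 80% power target are assumptions chosen for the example, and the calculation ignores clustering and attrition.

```python
from math import ceil
from statistics import NormalDist


def students_per_group(effect_size: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate students needed per group to detect a standardized effect.

    Uses the normal approximation n = 2 * (z_alpha + z_power)^2 / d^2 for a
    two-sided test comparing two independent group means.
    Assumes individual random assignment (no clustering) and no attrition.
    """
    standard_normal = NormalDist()
    z_alpha = standard_normal.inv_cdf(1 - alpha / 2)  # critical value for the test
    z_power = standard_normal.inv_cdf(power)          # quantile for the power target
    return ceil(2 * (z_alpha + z_power) ** 2 / effect_size ** 2)


# Hypothetical effect sizes: an optimistic pilot estimate versus more modest ones.
for d in (0.40, 0.20, 0.10):
    print(f"effect size {d:.2f}: about {students_per_group(d)} students per group")
```

Under these assumptions, halving the expected effect size roughly quadruples the number of students required, so planning around an optimistic pilot estimate can leave a study far too small to detect the effect that actually occurs in real classrooms.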

4. Do You Know Where It Works Best?

Not every program works everywhere. Use earlier studies to learn which grades, demographics, or school types show the strongest effects and where you expect your program to make an impact. This ensures your RCT is run in favorable conditions.

Common Missteps We’ve Seen

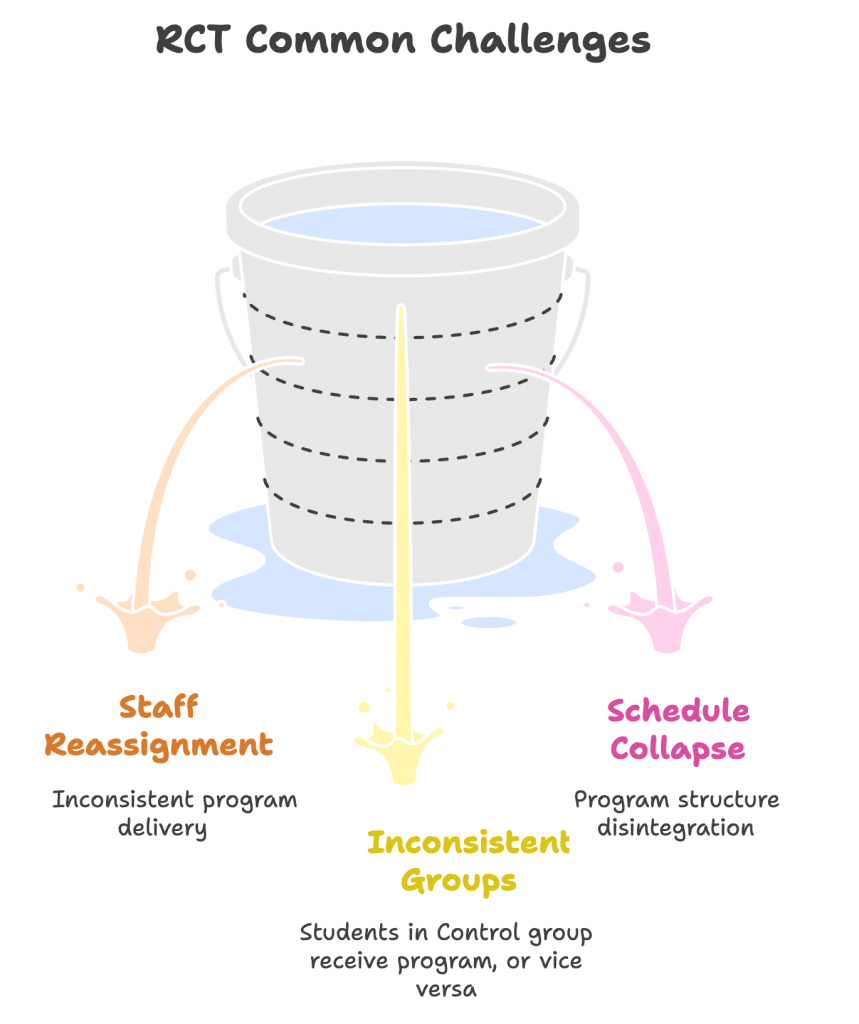

Implementation Challenges

📘 Case: A reading program with strong pilot data failed in an RCT because staff were reassigned, the groups served were inconsistent, and schedules fell apart. The study reflected chaos, not the program.

Solution: Conduct smaller preliminary studies to uncover organizational constraints, using frameworks like CFIR (Consolidated Framework for Implementation Research).

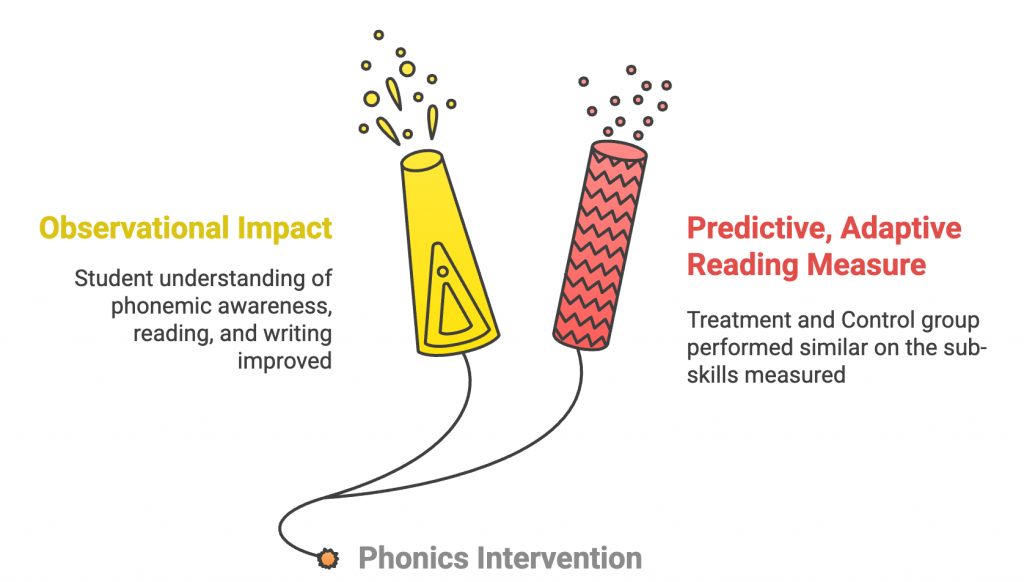

Outcome Alignment: Are You Measuring What Matters?

📘 Case: A phonics intervention showed visible student gains, but the RCT used a predictive literacy test instead of measuring actual reading. Result: outcomes no different from the control group’s, despite overwhelmingly positive feedback from educators.

Solution: Test and compare multiple assessment tools in early studies to ensure alignment. Learn more about the effectiveness and reliability of commonly used measures at NCII (National Center on Intensive Intervention).

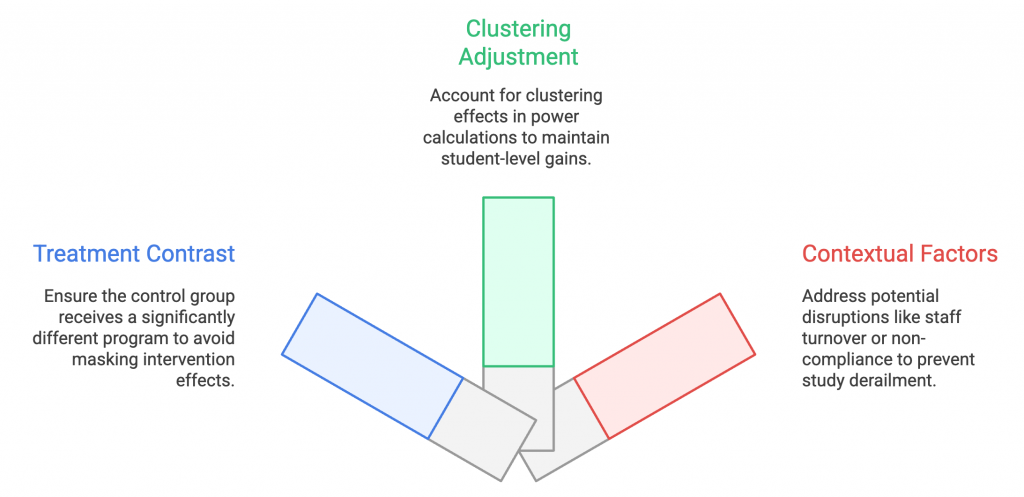

Contrast, Clustering & Context: RCT Design Pitfalls

Treatment Contrast: If your control group receives an equally strong or similar program, the RCT may show no difference even if your intervention would be effective in other contexts. 📘 Case: A new math program showed no significant difference from the existing math program because both were high-quality and the study window was too short.

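Clustering Requirements: Students in the same school or classroom tend to resemble one another, so adjusting for school-level or teacher-level clustering widens the uncertainty around your estimate and can erase the statistical significance of apparent student-level gains. Plan for this in your power calculations. 📘 Case: A reading RCT showed significant student growth, but the effects were no longer significant once clustering adjustments were applied.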

Context Sensitivity: High staff turnover, research fatigue, changing assessments, or district non-compliance can all derail even well-planned studies. Solution: Vet district partners through smaller studies first.
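The clustering point above can be made concrete with the standard design-effect formula, 1 + (m - 1) × ICC, where m is the average cluster size and ICC is the intraclass correlation (how similar students within the same cluster are). The sketch below is illustrative only: the function names and the example numbers (800 students, classes of 25, an ICC of 0.15) are hypothetical and not drawn from any study described here.

```python
def design_effect(cluster_size: float, icc: float) -> float:
    """Variance inflation from clustering: 1 + (m - 1) * ICC."""
    return 1 + (cluster_size - 1) * icc


def effective_sample_size(n_students: int, cluster_size: float, icc: float) -> float:
    """Number of independent students the clustered sample is 'worth'."""
    return n_students / design_effect(cluster_size, icc)


# Hypothetical study: 800 students in classes of 25, with an assumed ICC of 0.15.
deff = design_effect(25, 0.15)
n_eff = effective_sample_size(800, 25, 0.15)
print(f"Design effect: {deff:.1f}")
print(f"Effective sample size: {n_eff:.0f} of 800 students")
```

In this hypothetical case, 800 students carry about as much statistical information as roughly 174 independent students, which is why a result that looks significant at the student level can disappear once clustering is taken into account.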

RCT Financial Risk & Reputation Impact

- RCTs cost 7–9x more than correlational studies and 2x more than quasi-experiments.

- Failed RCTs may still be publicly shared, depending on the funding source. Evidence registries like WWC and Evidence for ESSA retain all outcomes—good or bad.

Stakeholders (and funders) are more impressed by a deliberate, strategic research progression than by a rushed RCT with weak results.

What to Do Next

If You’re a Publisher or an EdTech Product Team:

- Start with correlational studies using usage and performance data.

- Build partnerships with 2–3 pilot districts for quasi-experiments.

- Use this data to plan an RCT with strong protocols and power.

- Map out a full research roadmap aligned with ESSA levels.

- Don’t rely on one RCT to “check the box”—sequence multiple studies.

- Contact LXD Research to help you out!

If You’re an Educator Reading an RCT:

- Ask whether the study faced implementation issues, assessment misalignment, or inappropriate contexts.

- Don’t dismiss a program just because an RCT showed “no difference”—dig deeper into the study design.

Final Word: It’s OK to Get a “Null” Result

Sometimes, well-run RCTs show no difference, and that’s valid. But a null result doesn’t always mean a program failed. The program may:

- Work just as well as current options but at lower cost.

- Require better implementation supports.

- Need to target a more specific population or context.

RCTs are about learning, not just proving. A carefully sequenced research plan lowers your risk—and increases your chance of generating meaningful, credible evidence.

Want help mapping your RCT pathway? We specialize in designing multi-phase research plans that build strong, actionable evidence. Reach out to schedule a strategy session.